It’s a loaded question: How good does your data need to be?

If we’re honest with ourselves, we must admit that we’re not perfect. Nor are the systems we create to help minimize error perfect; therefore, how could our data be? Technology dramatically helps us improve the quality of our data, but there are no guarantees there either.

A recent Harvard Business Review publication reported that only 3% of companies’ data meets basic quality standards. Respondents were referring to the data on which they rely for accurate business decisions and effective workflows.

In our world of post-acute care delivery, the question of data accuracy shows up first in how we assess the resident and create a care plan. It then extends to the systems we put in place to deliver that care, how we evaluate its effectiveness, and ultimately how we communicate the results to the outside world.

By outside world, I’m referring to families, regulators, fiscal intermediaries, REITs, payers, advocates, referring organizations and downstream care providers, to name a few. Let’s call them stakeholders — I like that word.

Most skilled nursing facilities have a data quality strategy in place. It’s required. But fewer have systems that ensure the strategy is working. Sure, we have triple-check, and even quadruple-check, but most of these strategies rely on key individuals in the SNF doing the “right thing,” or on assurances from software vendors’ sales and marketing teams. Is that enough?

It is not.

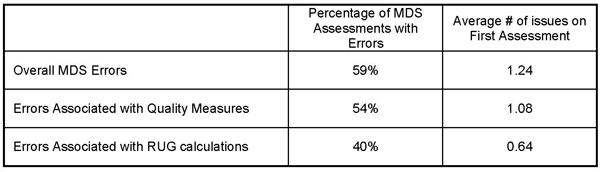

To illustrate this point, we conducted an analysis of 1.4 million MDS assessments submitted to us between Q1 2016 and Q3 2017. These were data submitted for verification prior to submission to CMS. Then the interdisciplinary team, generally led by the MDS coordinator, received real-time feedback on likely errors in the MDS and corrections were made. Below are the results.

In brief, 100% of SNFs in the study submitted MDS data with errors. One hundred percent.

So, what? Bad data wastes your valuable time, distorts your public profile and, worse, leads you astray when planning resident care and QAPI activities. Many post-acute pundits presume that errors in “RUG items” are an artifact of “upcoding.” In fact, this is not true. Generally, these errors lowered case mix by omitting acuity and care provided.

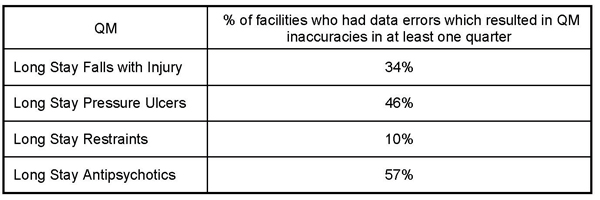

Data errors associated with Quality Measures have tremendous consequences that go beyond the obvious. We explored some of them in this same study. It’s not just that errors caused false positives (QMs that trigger when they should not have) or false negatives (QMs that don’t trigger when they should have). The more subtle differentiators of who was high- and low-risk were also affected.
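For readers who want to see the false positive and false negative idea in concrete terms, here is a minimal sketch. It assumes you have, for each resident, whether a given QM triggered on the as-submitted MDS and whether it triggered after corrections. The function name, the resident IDs and the sample values are all hypothetical; this is not the method used in our study.

```python
from collections import Counter


def classify_qm_flags(submitted, corrected):
    """Compare QM trigger flags from as-submitted vs. corrected MDS data.

    Both arguments are hypothetical dicts mapping a resident ID to True
    (the QM triggered) or False (it did not). The corrected flags are
    treated as the reference.
    """
    counts = Counter()
    for resident_id, was_flagged in submitted.items():
        truly_flagged = corrected[resident_id]
        if was_flagged and not truly_flagged:
            counts["false_positive"] += 1   # triggered when it should not have
        elif truly_flagged and not was_flagged:
            counts["false_negative"] += 1   # failed to trigger when it should have
        else:
            counts["agree"] += 1            # submitted and corrected data agree
    return counts


# Made-up illustration data, not results from the study.
submitted = {"r1": True, "r2": False, "r3": False, "r4": True}
corrected = {"r1": True, "r2": True, "r3": False, "r4": False}
result = classify_qm_flags(submitted, corrected)
print(dict(result))
```

A tally like this only captures the obvious errors. It says nothing about the subtler shifts in who looks high- or low-risk.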

The table below quantifies the impact of data errors on four of the Quality Measures.

Even though all QMs showed similar results, I chose to focus on just four QMs to tie in another concept I wrote about a few months ago. These measures are used in Texas’ QIPP program. Not only are errors impacting the clinical profile for these facilities, and causing inaccurate care plans, they are potentially reducing value-based purchasing reimbursement. The negative consequence of bad data has a significant multiplier.

So really, how good does your data need to be? What is your acceptable error rate on any particular dataset? What is the ultimate consequence of not reaching your threshold? In the HBR publication I referenced above, they offer up the “rule of ten.” Simply put, multiply your costs to complete any unit of work by 10. That is the consequence of bad data.

Now, exactly how much does that QAPI or care plan cost?

Steven Littlehale is a gerontological clinical nurse specialist, and executive vice president and chief clinical officer at PointRight Inc.